# Lexer generator algorithm code #

flex file, and then use its identifier.Ĭool_yylval.symbol = inttable.add_string(yytext) Īn executable code here is run when a lexeme is captured by regex. It's possible to describe a regex at the beginning of a. Flex always uses a greedy regex algorithm, capturing characters from an input text while they match a regex.įlex performs rule checking in the order they are located, so a rule with higher priority must be located first.Ī lexer must not fall to a segfault, it must process any chains of input symbols, including erroneous, and, if a chain doesn't correspond to any lexeme of a language, get a special token for an error, to let a parser print corresponding error message with a number of a source text line containing an error. The next thing we need to describe a rule is a regular expression, which captures a lexeme. The main program text is processed in the INITIAL state, states COMMENT and LINE_COMMENT are for comments, obviously, and the state STRING is intended for string literals processing. In some computer languages, like Pascal, we need more states for comments, because Pascal has two bracket forms of comments, (*comment*) and, and one-line comment (//comment), like C/C++ lang does. In most cases, for parsing a real computer language text, we need some additional states, for example, COMMENT, LINE_COMMENT, and STRING.

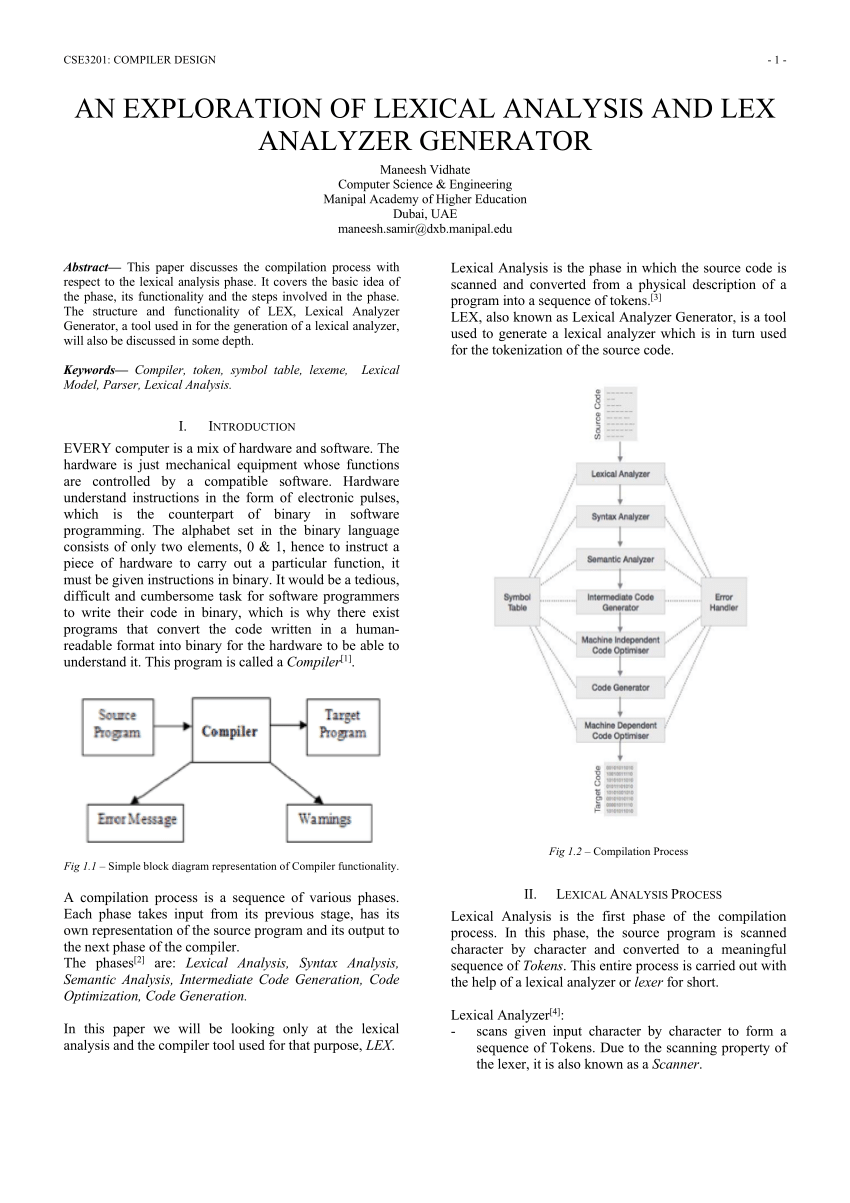

In Flex, it's a default state and doesn't require an explicit declaration. So, what is a state? We can consider a lexer as a finite state machine. Use P+ to create your own parser with informative error messages, and optimized algorithm. To describe a rule, it's necessary to have three things: a state, a regular expression, and executable code. Introducing P+, a parser generator derived from nearley.js. In this article, we'll examine how it works in general, and observe some nontrivial nuances of developing a lexer with Flex.Ī file, describing rules for Flex consists of rules. If we define some parsing rules, corresponding to an input language syntax, we get a complete lexical analyzer (tokenizer), which can extract tokens from an input program text and pass them to a parser. It's possible to write a lexer from scratch, but much more convenient to use any lexer generator.

A lexical analyzer (also known as tokenizer) sends a stream of tokens further, into a parser, which builds an AST (abstract syntax tree). It gets an input character sequence and finds out what the token is in the start position, whether it's a language keyword, an identifier, a constant (also called a literal), or, maybe, some error. mental lexer with the incremental parser (needed for such languages as C.

It's used for getting a token sequence from source code. No changes to the generators algorithms or run-time. Lexical analysis is the first stage of a compilation process.
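The anatomy of a rule (a state, a regular expression, and executable code) can be illustrated with a small simulation. This is only a sketch in Python, not Flex itself and not the code Flex generates. The token kinds, the keyword list, and all helper names (`begin`, `emit`, `Lexer`, `tokenize`) are assumptions made for illustration. It imitates three behaviors discussed in this article: rules are tried only in the current state, the longest match wins with ties going to the earlier rule, and unknown input becomes an error token that carries a line number.

```python
import re

# Toy scanner that imitates Flex's rule selection; it is not Flex itself.
# Token kinds, keywords, and helper names are hypothetical.

def begin(state):
    """Action factory: switch the lexer to another state, emit nothing."""
    def action(lx, text):
        lx.state = state
    return action

def emit(kind):
    """Action factory: return a (kind, line, lexeme) token."""
    return lambda lx, text: (kind, lx.line, text)

def skip(lx, text):
    pass

def newline(lx, text):
    lx.line += 1

def end_line_comment(lx, text):
    lx.line += 1
    lx.state = "INITIAL"

def open_string(lx, text):
    lx.buf, lx.state = "", "STRING"

def string_chunk(lx, text):
    lx.buf += text

def close_string(lx, text):
    lx.state = "INITIAL"
    return ("STRING", lx.line, lx.buf)

def broken_string(lx, text):
    # A newline inside a literal: report it and recover instead of crashing.
    token = ("ERROR", lx.line, "unterminated string")
    lx.line += 1
    lx.state = "INITIAL"
    return token

RULES = [  # (state, regular expression, action), in priority order
    ("INITIAL", r"\(\*", begin("COMMENT")),
    ("INITIAL", r"//", begin("LINE_COMMENT")),
    ("INITIAL", r'"', open_string),
    ("INITIAL", r"if|then|else", emit("KEYWORD")),  # must precede IDENT
    ("INITIAL", r"[A-Za-z_][A-Za-z0-9_]*", emit("IDENT")),
    ("INITIAL", r"[0-9]+", emit("INT")),
    ("INITIAL", r"[ \t]+", skip),
    ("INITIAL", r"\n", newline),
    ("INITIAL", r".", emit("ERROR")),  # catch-all for unknown characters
    ("COMMENT", r"\*\)", begin("INITIAL")),
    ("COMMENT", r"\n", newline),
    ("COMMENT", r".", skip),
    ("LINE_COMMENT", r"[^\n]+", skip),
    ("LINE_COMMENT", r"\n", end_line_comment),
    ("STRING", r'"', close_string),
    ("STRING", r'[^"\n]+', string_chunk),
    ("STRING", r"\n", broken_string),
]

class Lexer:
    def __init__(self, rules=RULES):
        self.rules = [(s, re.compile(p), a) for s, p, a in rules]
        self.state = "INITIAL"  # the default state
        self.line = 1
        self.buf = ""           # body of the string literal being read

    def scan(self, text):
        tokens, pos = [], 0
        while pos < len(text):
            best = None
            for state, regex, action in self.rules:
                if state != self.state:
                    continue
                m = regex.match(text, pos)
                # Longest match wins; '>' keeps the earlier rule on a tie.
                if m and m.end() > pos and (best is None or m.end() > best[0].end()):
                    best = (m, action)
            m, action = best  # never None: each state covers every character
            token = action(self, m.group())
            if token is not None:
                tokens.append(token)
            pos = m.end()
        if self.state in ("COMMENT", "STRING"):
            tokens.append(("ERROR", self.line, "unexpected end of input"))
        return tokens

def tokenize(text):
    return Lexer().scan(text)
```

With these rules, `tokenize("if iffy")` produces a KEYWORD token for `if` and an IDENT token for `iffy`. Both rules match the two characters of `if`, so the earlier KEYWORD rule wins the tie, while for `iffy` the IDENT rule wins because its match is longer. The catch-all `.` rule at the end of the INITIAL state is what keeps the scanner from failing on unexpected characters.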
